Model Convo: Cody Moser

Collective intelligence, Andrei Rublev, and dungeon synth

Welcome back to the “Model Convo” series — micro interviews with researchers in AI policy and related fields. If you know someone I should interview — maybe that person is you? — please email me.

What is your present position?

I’m a professor at the School of Collective Intelligence at Mohammed VI Polytechnic University (UM6P) in Morocco. My work focuses on how knowledge ecosystems generate, transmit, and lose knowledge using a mix of computational models and machine learning approaches. I also run a podcast with my friend, Kyle Housley, called The Horizons Review, where we discuss neglected texts in philosophy and cognition at the intersection of technology and human agency.

[You can follow Cody on X, Bluesky, and Substack. He’s also an alumnus of the Mercatus Center’s Oskar Morgenstern Fellowship and the Diverse Intelligences Summer Institute.]

What were you doing before?

I actually began my career as an anthropologist. I spent several years in the field trying to figure out how I could port tools from evolutionary dynamics to understand modern institutions, but the methods to do so didn't exist at the time, so I left. Between then and what I do now, I worked as a visiting fellow at Harvard and interned at several think tanks in Washington, DC, but ultimately realized I had too many unanswered questions that I could only pursue in academia. So I went back for a PhD in Cognitive and Information Sciences, where I focused on complex systems.

How did you get into emerging tech policy?

My entry point wasn’t tech itself, but history, especially why certain places and moments become extraordinarily generative, and why collective creativity eventually fades. During my PhD, I became fascinated with Vienna at the turn of the 20th century and the broader Austro-Hungarian intellectual world. In a relatively small region, within just a few decades, you get Kurt Gödel redefining logic, von Neumann shaping computation, Boltzmann inventing statistical mechanics, and thinkers like Hayek, Szilard, Michael Polanyi, and many others developing ideas about computation and complex systems. It was one of those rare periods where an entire architecture of thought for the modern world crystallized almost at once.

So I started asking what conditions made such a culture possible. What kinds of institutions and networks allow so many ideas to emerge, and why do they disappear? That line of thinking naturally led me to the connection between technology and culture, because we're living through another moment where the underlying logics of the world are being rewritten by a restructuring of the role that computation, communication systems, and scientific collaborations play in developing new ideas. The questions I care about are basically the same: How do we design environments where innovation is both possible and responsible? How do we create or capture these Central European moments and develop a continuing science of collective thought?

What work of art has most shaped your views on emerging tech?

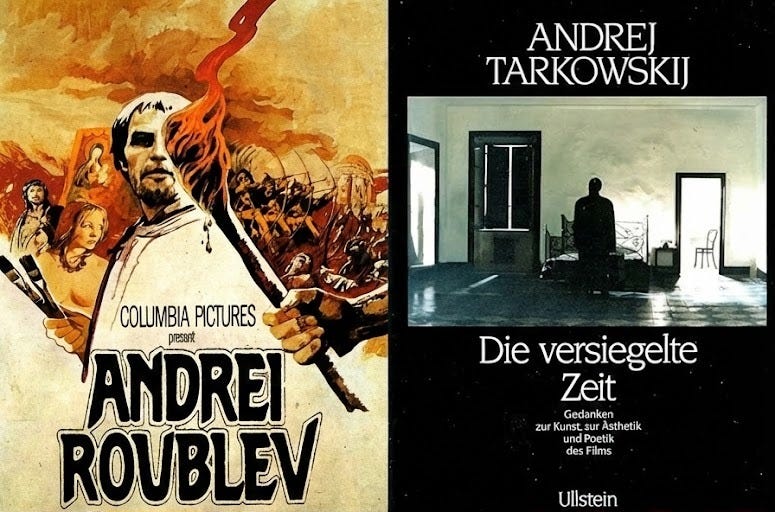

One work that has shaped my thinking is Tarkovsky's Andrei Rublev. It's a film about an icon painter, but really it's about the mystery of how ideas and inspiration arrive. Tarkovsky shows creativity not as something a person produces, but as something that passes through a person, as if the artist becomes a vessel for something larger than himself. We often talk as if innovation is something we control, but Tarkovsky seems to imply that creativity depends on the social world and moral atmosphere of a person and their community.

Tarkovsky wrote about this at greater length in Sculpting in Time, a book somehow more beautiful than the film. His idea that art and thought take shape slowly, through attention and patience, has stayed with me. It makes me think we need not only to optimize for productivity but also to cultivate environments where inspiration is possible.

What’s your most contrarian take on AI policy?

My most contrarian take is that we've been looking at "the human in the loop" from the wrong side. We keep talking about how to keep individual humans involved in AI systems, as if the relevant cognitive unit is just a person sitting at a terminal making choices. But the systems that generate innovation and meaning, like markets, academic communities, and cultural traditions, are collective cognitive systems. They have memory, bias, inertia, inference, and failure modes, just like individual brains.

What worries me is that we're building powerful AI systems that interface directly with those social cognitive systems, not just individual minds. And those social systems are already opaque. They don't "think" in ways that we can easily see or steer, and yet they decide what counts as knowledge, what gets adopted, and what gets forgotten. We've been debating whether AI will replace the human, which increasingly seems to be the case, but the more unsettling question is how AI will reshape the conditions under which societies think.

The second part of this is that I believe good ideas rarely originate from the center. Historically, real breakthroughs come from the periphery. Our intellectual borderlands, dissenting traditions, and nations that are not fully absorbed into the dominant worldview are reservoirs of scientific change. If our knowledge ecosystems become ever more centralized through opaque, recommender-mediated processes and increasingly dense social networks, we risk collapsing that generative periphery. We may get efficiency in the short term, but at the cost of discovery in the long term.

What are you reading now?

I usually have three books I'm going through at any one time: one philosophical, one historical, and one work of fiction. Right now, these are The Ghost in the Machine by Arthur Koestler, Aristotle's Physics, and The Book of the Dun Cow by Walter Wangerin Jr.

Go-to emerging tech music track?

I listen to a lot of dungeon synth. Most recently Quest Master has made some interesting techno-transcendent dungeon-synth music. I went and saw him live and it blew me away. I would check out “Obscure Power.”